Self-Supervised Surround-View Depth Estimation with Volumetric Feature Fusion

2022.11.08

36th annual Conference on Neural Information Processing Systems (NeurIPS 2022)에 실린 김중희, 허준화, Tien Nguyen, 정성균 저자의 “Self-supervised surround-view depth estimation with volumetric feature fusion” 논문을 소개합니다. NeurIPS는 세계적인 머신러닝(ML)·AI 학회로 가장 최신의 머신러닝 리서치 기술에 대해 학계와 산업계를 포함한 다양한 연구자들이 참여하여 소개하고 있습니다.

Conference

- 36th annual Conference on Neural Information Processing Systems (NeurIPS 2022)

- NeurIPS is one of the biggest machine learning and artificial intelligence conference, where researchers from both academia and industry gather to share the latest machine learning research.

- The paper “Self-supervised surround-view depth estimation with volumetric feature fusion” written by Jung-Hee Kim, Junwha Hur, Tien Nguyen, Seong-Gyun Jeong, has been accepted to the NeurIPS 2022.

- Click the link below for details.

➠ https://openreview.net/forum?id=0PfIQs-ttQQ

➠ https://github.com/42dot/VFDepth

Publication

- Title: Self-supervised surround-view depth estimation with volumetric feature fusion

- Authors: Jung-Hee Kim, Junhwa Hur, Tien Nguyen, Seong-Gyun Jeong

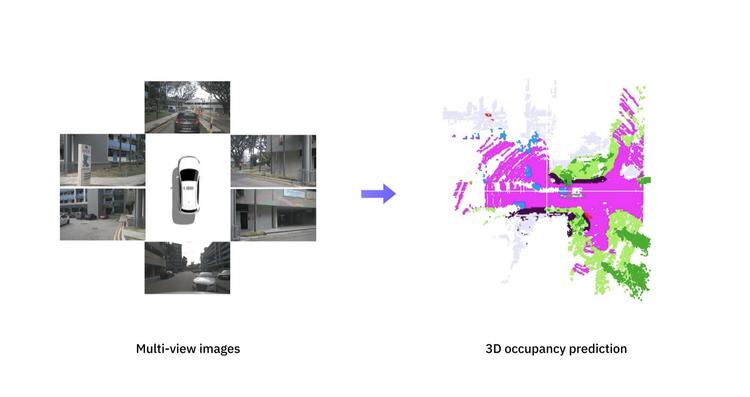

- Abstract: We present a self-supervised depth estimation approach using a unified volumetric feature fusion for surround-view images. Given a set of surround-view images, our method constructs a volumetric feature map by extracting image feature maps from surround-view images and fuse the feature maps into a shared, unified 3D voxel space. The volumetric feature map then can be used for estimating a depth map at each surround view by projecting it into an image coordinate. A volumetric feature contains 3D information at its local voxel coordinate; thus our method can also synthesize a depth map at arbitrary rotated viewpoints by projecting the volumetric feature map into the target viewpoints. Furthermore, assuming static camera extrinsics in the multi-camera system, we propose to estimate a canonical camera motion from the volumetric feature map. Our method leverages 3D spatio- temporal context to learn metric-scale depth and the canonical camera motion in a self-supervised manner. Our method outperforms the prior arts on DDAD and nuScenes datasets, especially estimating more accurate metric-scale depth and consistent depth between neighboring views.

Jung-Hee Kim | AD Algorithm

I’m in charge of developing 3D vision models for autonomous vehicles.

.png&w=750&q=75)

.png&w=750&q=75)

.png&w=750&q=75)

.png&w=750&q=75)

.jpg&w=750&q=75)

.png&w=750&q=75)

.png&w=750&q=75)