Autonomous

Driving

Driving

MCMOT : multi-camera multi-object tracking

자율주행 시스템은 여러 대의 카메라를 활용해 주변 환경을 인식합니다. 따라서 카메라 시점을 가로질러 움직이는 객체를 인식하려면 동일한 트랙 ID를 유지하는 것이 중요합니다. 우리는 3개의 전면 카메라로 캡처한 객체에 고유한 트랙 ID를 할당하는 주석이 달린 데이터 세트를 제공합니다. 전면 중앙에 있는 카메라의 화각(FOV)은 60도이고, 전면(왼쪽과 오른쪽)에 있는 두 카메라의 화각(FOV)은 120도입니다. 전면 3개의 카메라 모두 1920x1208의 해상도를 가지고 있습니다. MCMOT 데이터 세트의 카메라 이름은 다음과 같습니다.

- camera_front_center_fov60

- camera_front_left_fov120

- camera_front_right_fov120

Download the MCMOT Dataset

Label Format

JSON 포맷의 annotation은 아래와 같습니다:

{

"filename": "0029_camera_front_center_fov60.jpg", // image file name

"objects": [

{

"id": 1, // assign unique integer for a same object

"geometry": // coordinates of 4 vertices for each faces

{

"front": null,

"left": null,

"rear": [[x1, y1], [x1, y2], [x2, y2], [x2, y1]],

"right": null

},

"type": "Pedestrian", // car, pedestrian, bus, truck, two wheeler, misc.

"visibility": "Fully_visible"

},

{

{

"filename": "0029_camera_front_center_fov60.jpg", // image file name

"objects": [

{

"id": 1, // assign unique integer for a same object

"geometry": // coordinates of 4 vertices for each faces

{

"front": null,

"left": null,

"rear": [[x1, y1], [x1, y2], [x2, y2], [x2, y1]],

"right": null

},

"type": "Pedestrian", // car, pedestrian, bus, truck, two wheeler, misc.

"visibility": "Fully_visible"

},

{ The tables below describe the annotation specifications. Table 1 and 2 show descriptions and rules for annotation types,

geometry and attributes.

geometry and attributes.

| Type | Description |

|---|---|

| Car | Passenger car, i.e., sedan or SUV, etc. |

| Bus | Vehicle can carry more than 12 people |

| Truck | All types of trucks except tow trucks |

| Two wheeler | An object that runs on two wheels and a person who is with it, i.e., motorcycle, scooter, moped, bicycle, kick_scooter, stroller, etc. |

| Pedestrian | Every person running, walking, sitting or standing. |

| Emergency car | Police car, ambulance, fire engine, tow truck, etc. |

| Misc. | Other kinds of movable objects on the road |

| Table 1 : Type description for moving object | |

The annotation result consists of geometries and attributes which are defined as:

| Group | Subgroup | Data |

|---|---|---|

| Geometry | Rear face | List of 4 vertices (rectangle) |

| Front face | List of 4 vertices (rectangle) | |

| Left face | List of 4 vertices (trapezoid) | |

| Right face | List of 4 vertices (trapezoid) | |

| Attributes | ID | Assigned unique integer for identifying same objects on overall frames and cameras |

| Visibility level | Fully_visible - 100 % Weakly_occluded/truncated - 60~99 % Mostly_occluded/truncated - 30~59 % Occluded/truncated - 0~29 % | |

| Table 2 : Geometry and attributes description for moving object | ||

We are interested in all types of visible objects such as cars, buses, trucks, two wheelers, pedestrians, emergency vehicles,

and other moving and stationary objects. Additionally, if the same object is observed from multiple cameras, the object is

given the same ID.

and other moving and stationary objects. Additionally, if the same object is observed from multiple cameras, the object is

given the same ID.

Folder Structure

Training set와 test set 제공됩니다. 시나리오마다 이미지(.jpg)와 해당 label(.json)이 있지만 test dataset에는 object ID 정보가 없습니다.

MCMOT /

└── train/

├── images/

├── scenario_name 1/

├── 0020_camera_front_center_fov60.jpg

├── 0020_camera_front_left_fov120.jpg

├── 0020_camera_front_right_fov120.jpg

├── labels/

├── scenario_name 1/

├── 0020_camera_front_center_fov60.json

├── 0020_camera_front_left_fov120.json

├── 0020_camera_front_right_fov120.json

└── test/

├── images/

├── scenario_name 2/

├── labels/

├── scenario_name 2/ Citation

MCMOT 활용시 아래 citation을 이용해주세요.

@misc{mcmot_42dot,

title = "42dot MCMOT dataset",

url = "https://www.42dot.ai/akit/dataset/mcmot"

} Tutorial

MCMOT Dataset Tutorial

These are some tools to utilize the MCMOT (Multi-Camera Multi-Object Tracking) dataset.

To run this tutorial, please download the MCMOT dataset from https://42dot.ai/akit/dataset/ and install the following packages: matplotlib, numpy,PIL, opencv_python andscipy.

To run this tutorial, please download the MCMOT dataset from https://42dot.ai/akit/dataset/ and install the following packages: matplotlib, numpy,PIL, opencv_python andscipy.

In [1]:

# !sudo apt-get install tree # !pip install opencv-python

After download MCMOT dataset, you can find tutorials folder.

In [2]:

!tree -L 2

.

├── Poppins-Bold.ttf

├── contents

│ ├── fig1.jpg

│ └── fig2.png

├── eval.py

├── mcmot_tutorial.ipynb

├── plot_mcmot.py

└── samples

├── draw_samples

├── ground_truth_sample

├── prediction_label_sample

└── submission_folder_tree_example

6 directories, 6 filesLoad Image and Draw Annotations

We provide sample images, annotations and python script to view the annotations on the images.

In [3]:

1 % 20 % 41 % 60 % 80 %

In [4]:

from IPython.display import Image

In [5]:

Image("img_res/draw_sample/f7860171059d0d7af759358451b0bcd3/0151_camera_front_left_fov120.jpg")

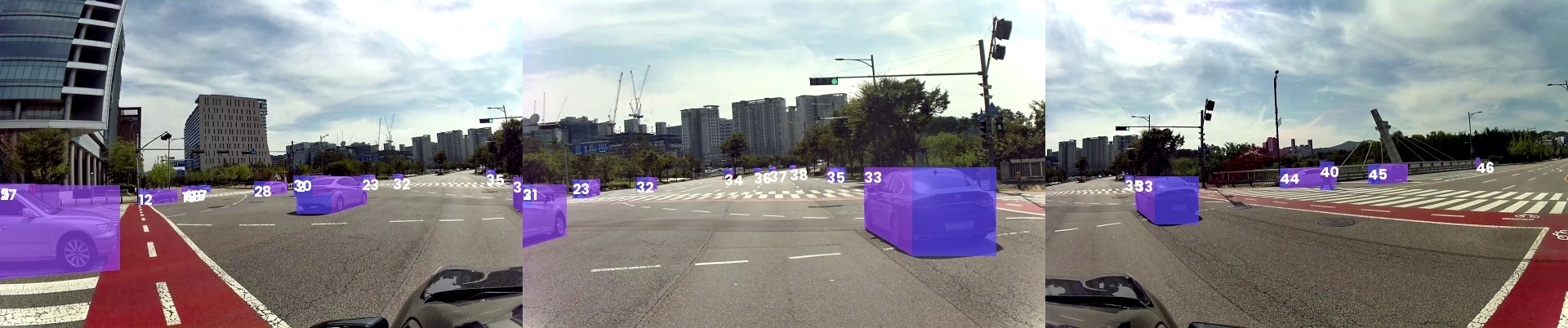

We assign the same annotation track ID to the same object of the data acquired from the three front cameras. The object with ID 35, a car is visible on all three cameras identifying the same object.

In [6]:

Image("img_res/draw_sample_concat/f7860171059d0d7af759358451b0bcd3/f7860171059d0d7af759358451b0bcd3_0130.jpg")

Evaluate IDF1 metric

We provide ground truth samples and prediction label samples.

In [7]:

!tree samples -L 2

samples

├── draw_samples

│ ├── images

│ └── labels

├── ground_truth_sample

│ ├── 091f8cef55442f47239d6ad85ac5d319

│ └── b63682f5111862fa731682eac7863471

├── prediction_label_sample

│ ├── 091f8cef55442f47239d6ad85ac5d319

│ └── b63682f5111862fa731682eac7863471

└── submission_folder_tree_example

└── sample_submit.zip

10 directories, 1 fileIf you want to see the specification of evaluation metrics, please see contents/fig1.jpg and contents/fig2.png.

In [8]:

!python eval.py --gt_root samples/ground_truth_sample --val_root samples/prediction_label_sample

{'weighted_IDF1': 99.10083493898523, 'total_IDTP': 3086, 'total_IDFN': 28}To enter the challenge, you must submit inference on the test dataset (MCMOT/test/images and MCMOT/test/labels). There are three scenes and their names are:

In [9]:

!tree ../test/labels -L 1

../test/labels ├── 1fcb7ae96a6c6c5eefe088f37a7e280e ├── 7f1a61133eeeaa7a3f98bb65303548a7 └── edc1a8dc013a842e8d2b43016766689e 3 directories, 0 files

ID information is not provided in the labels folder. Your task is to assign a unique integer id to each object for every frame. Do not modify other properties including 'geometry', 'type' and 'visibility'.

In [10]:

!cat ../test/labels/1fcb7ae96a6c6c5eefe088f37a7e280e/0020_camera_front_right_fov120.json

{

"filename": "0020_camera_front_right_fov120.jpg",

"objects": [

{

"geometry": {

"front": [

[

34.75687237348936,

658.0078896244875

],

[

34.75687237348936,

688.3078482508838

],

[

53.18674411531805,

688.3078482508838

],

[

53.18674411531805,

658.0078896244875

]

],

"left": [

[

53.18674411531805,

658.0078896244875

],

[

53.18674411531805,

688.3078482508838

],

[

66.38731633408966,

684.2430490124398

],

[

66.38731633408966,

658.2528844578719

]

],

"rear": null,

"right": null

},

"type": "Truck",

"visibility": "Mostly_occluded/truncated"

},

{

"geometry": {

"front": null,

"left": null,

"rear": [

[

93.69251335604012,

650.4652889553936

],

[

93.69251335604012,

701.5001321609686

],

[

132.62631126544778,

701.5001321609686

],

[

132.62631126544778,

650.4652889553936

]

],

"right": [

[

132.62631126544778,

650.4652889553936

],

[

132.62631126544778,

701.5001321609686

],

[

139.99216492398438,

699.921734948425

],

[

139.99216492398438,

650.202222753303

]

]

},

"type": "Truck",

"visibility": "Occluded/truncated"

},

{

"geometry": {

"front": null,

"left": null,

"rear": [

[

157.7852186189789,

656.8279823638785

],

[

157.7852186189789,

713.6388979136929

],

[

207.03760034027715,

713.6388979136929

],

[

207.03760034027715,

656.8279823638785

]

],

"right": null

},

"type": "Car",

"visibility": "Mostly_occluded/truncated"

},

{

"geometry": {

"front": null,

"left": null,

"rear": [

[

46.05007807090621,

669.2812749589532

],

[

46.05007807090621,

844.4359039481835

],

[

233.73706552273907,

844.4359039481835

],

[

233.73706552273907,

669.2812749589532

]

],

"right": null

},

"type": "Car",

"visibility": "Fully_visible"

},

{

"geometry": {

"front": null,

"left": [

[

231.12046233003215,

606.9336199284

],

[

231.12046233003215,

704.9802327877453

],

[

238.24741647394694,

713.0164078524977

],

[

238.24741647394694,

607.2637946120796

]

],

"rear": [

[

238.24741647394694,

607.2637946120796

],

[

238.24741647394694,

713.0164078524977

],

[

307.6968938258633,

713.0164078524977

],

[

307.6968938258633,

607.2637946120796

]

],

"right": null

},

"type": "Bus",

"visibility": "Fully_visible"

},

{

"geometry": {

"front": null,

"left": [

[

383.92442724412456,

654.9033796654878

],

[

383.92442724412456,

707.5439562179057

],

[

413.26023793449497,

709.3155140826506

],

[

413.26023793449497,

654.9323532653152

]

],

"rear": [

[

413.26023793449497,

654.9323532653152

],

[

413.26023793449497,

709.3155140826506

],

[

464.4632090308941,

709.3155140826506

],

[

464.4632090308941,

654.9323532653152

]

],

"right": null

},

"type": "Car",

"visibility": "Fully_visible"

},

{

"geometry": {

"front": null,

"left": null,

"rear": null,

"right": [

[

341.8415110008989,

650.3620116872476

],

[

341.8415110008989,

683.7588832769164

],

[

414.5675932126122,

677.167395463166

],

[

414.5675932126122,

650.1422954267891

]

]

},

"type": "Car",

"visibility": "Occluded/truncated"

},

{

"geometry": {

"front": null,

"left": [

[

1864.8000000000002,

741.6000000000001

],

[

1843.2000000000003,

725.6000000000001

],

[

1843.2000000000003,

631.7380952380952

],

[

1864.8000000000002,

631.7380952380952

]

],

"rear": [

[

1864.8000000000002,

631.7380952380952

],

[

1889.2857142857138,

631.7380952380952

],

[

1889.2857142857138,

741.6000000000001

],

[

1864.8000000000002,

741.6000000000001

]

],

"right": null

},

"type": "Two wheeler",

"visibility": "Weakly_occluded/truncated"

},

{

"geometry": {

"front": null,

"left": null,

"rear": [

[

263.59999999999997,

661.6

],

[

263.59999999999997,

720.8

],

[

241.6,

720.8

],

[

241.6,

661.6

]

],

"right": null

},

"type": "Two wheeler",

"visibility": "Fully_visible"

},

{

"geometry": {

"front": [

[

1139,

841

],

[

1139,

671

],

[

1165,

671

],

[

1165,

841

]

],

"left": [

[

1165,

841

],

[

1165,

671

],

[

1286,

669

],

[

1286,

824

]

],

"rear": null,

"right": null

},

"type": "Two wheeler",

"visibility": "Weakly_occluded/truncated"

}

]

}When you submit the inference results, please compress the three folders (1fcb7ae96a6c6c5eefe088f37a7e280e, 7f1a61133eeeaa7a3f98bb65303548a7 and edc1a8dc013a842e8d2b43016766689e) containing only .json file (id assignment and other property) into a .zip file. Do not compress image files. You will have trouble submitting your results when uploading other types of files. There is an example of a .zip file in samples/submission_folder_tree_example based on test scenarios.

In [11]:

!tree samples/submission_folder_tree_example -L 1

samples/submission_folder_tree_example └── sample_submit.zip 0 directories, 1 file

In [12]:

!unzip -q samples/submission_folder_tree_example/sample_submit.zip -d samples/submission_folder_tree_example

In [13]:

!tree samples/submission_folder_tree_example -L 1

samples/submission_folder_tree_example ├── 091f8cef55442f47239d6ad85ac5d319 ├── b63682f5111862fa731682eac7863471 └── sample_submit.zip 2 directories, 1 file

Download the MCMOT Dataset